AI SIMPLY EXPLAINED

All You Need To Know About AI

What are generative agents?

Asmi Gulati

Today we will dig into the research paper "Generative Agents: Interactive Simulacra of Human Behavior" (Park et al., 2023) and explain its key insights as simply as possible.

Imagine you're cruising down the streets of Los Santos in "Grand Theft Auto V". You've just pulled off a major heist, and the city should be just another game map to you. But what if the street vendor you knocked over last week remembers you and refuses to sell you that life-saving hotdog? Or the cop you evaded in a thrilling chase recognizes your vehicle and keeps an extra watchful eye on you? What if that pedestrian you once helped now hails a taxi for you when he sees you stranded? This isn't just another update; it could be the new future where characters evolve in response to your actions, all thanks to Generative Agents (or GAs, because in the fast-paced world of GTA, who has time for full names?).

Generative Agents are like the love child of your overthinking friend, who never forgets anything, and your ever-adapting roommate, who knows how to handle the twists life throws at them.

Now, what are generative agents even made of? You probably guessed it: LLMs (large language models)!

At its core, an LLM is a neural network that has been trained on an enormous amount of text. The vastness of this training allows it to generate human-like responses. But for our Generative Agents to act and react like humans, they can't rely only on a static snapshot of knowledge (i.e., their training data up to some cutoff date). They need to 'live' and 'learn' continually. And that means we need to extend their memory capabilities, both short-term and long-term!

Think of GAs as having three parts: a diary where they jot down everything (that's the memory), a thinking cap where they ponder over these memories (reflection), and a planner to decide their next actions (planning).

Now our first task is giving these LLMs a memory and subsequently defining how to retrieve those memories.

Imagine You Ask ChatGPT for Book Recommendations

Challenge: Limited Context Window

Let's say you come to ChatGPT and ask, "Can you recommend me a book that I would enjoy?" With the standard limited context window, ChatGPT might request that you provide your preferences again or offer popular general recommendations without considering your past interactions.

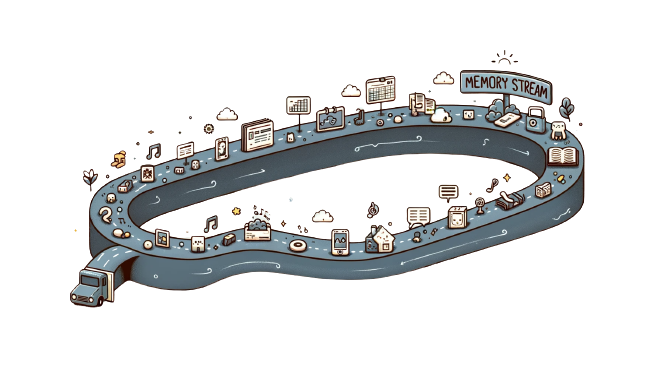

Approach: Memory Stream for Enhanced Retrieval

Now, picture ChatGPT equipped with a Memory Stream, holding a detailed record of your previous discussions about the books you've enjoyed and topics that interest you. For instance, this Memory Stream could include notes on:

- Your enjoyment of "1984" by George Orwell and your interest in dystopian novels.

- Your appreciation for complex characters and deep philosophical themes in literature.

- Your recent inquiry about historical fiction set during World War II.
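To make the idea concrete, here is a minimal sketch of what such a Memory Stream could look like in Python. The class names, the fields, the 1-10 importance scale, and the dates are all assumptions made for illustration, not the paper's actual implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Memory:
    text: str             # natural-language note about what happened
    created_at: datetime  # when the note was recorded
    importance: int = 1   # hypothetical 1-10 rating of how much the note matters


@dataclass
class MemoryStream:
    records: list[Memory] = field(default_factory=list)

    def add(self, text: str, created_at: datetime, importance: int = 1) -> Memory:
        """Append a new note to the end of the stream and return it."""
        memory = Memory(text, created_at, importance)
        self.records.append(memory)
        return memory


# Illustrative entries mirroring the notes listed above (dates are made up).
stream = MemoryStream()
stream.add("Enjoyed '1984' by George Orwell; interested in dystopian novels.",
           datetime(2024, 1, 5), importance=8)
stream.add("Appreciates complex characters and deep philosophical themes.",
           datetime(2024, 1, 12), importance=7)
stream.add("Asked about historical fiction set during World War II.",
           datetime(2024, 1, 20), importance=6)
```

The stream is just an append-only list of timestamped notes. Everything interesting happens later, when we decide which of these notes to pull back out.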

Memory and Retrieval Function in Action

When you request a book recommendation this time, ChatGPT's memory retrieval function will spring into action:

It would rate each of ChatGPT's memories on the following three parameters:

- Recency: Your recent query about historical fiction would score highly on recency.

- Importance: Your preference for dystopian novels and philosophical themes would be deemed important as they contribute significantly to your reading satisfaction.

- Relevance: The relevance score would spike for memories related to past book discussions that align with your stated preferences.

Memories that wouldn't be as impactful in this context might include:

- A casual mention of weather preferences from several months ago (low recency).

- Recalling routine tasks such as grocery shopping or daily commutes (low importance).

- A past discussion on cooking recipes (low relevance to book recommendations).

The highest-scoring memories are then passed to the model along with your request. This is how an advanced version of ChatGPT, with a Memory Stream, would provide recommendations that are consistent with your earlier conversations and its understanding of your preferences.
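The scoring idea can be sketched in a few lines of Python. This is a simplified illustration under several assumptions: the three scores are simply added with equal weight, recency decays exponentially per hour with an illustrative factor of 0.995, importance is a hand-assigned 1-10 number, and relevance uses plain word overlap as a stand-in for the embedding similarity a real system would more likely use. All function names and sample data are hypothetical.

```python
from datetime import datetime


def recency_score(created_at: datetime, now: datetime, decay: float = 0.995) -> float:
    """Exponential decay per hour of age; `decay` is an illustrative value."""
    hours_old = (now - created_at).total_seconds() / 3600
    return decay ** hours_old


def relevance_score(query: str, text: str) -> float:
    """Word-overlap (Jaccard) similarity, a crude stand-in for embedding similarity."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q and t else 0.0


def retrieve(query, memories, now, top_k=2):
    """Rank (text, created_at, importance) tuples by the sum of the three scores."""
    scored = []
    for text, created_at, importance in memories:
        score = (
            recency_score(created_at, now)
            + importance / 10  # importance assumed to be on a 1-10 scale
            + relevance_score(query, text)
        )
        scored.append((score, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_k]]


# Made-up memories and dates, for illustration only.
now = datetime(2024, 1, 21)
memories = [
    ("Enjoyed 1984 and wants a dystopian book with philosophical themes", datetime(2024, 1, 5), 8),
    ("Asked about a historical fiction book set during World War II", datetime(2024, 1, 20), 6),
    ("Mentioned liking rainy weather", datetime(2023, 9, 1), 2),
    ("Discussed cooking recipes for pasta", datetime(2024, 1, 10), 2),
]
top_memories = retrieve("recommend a book I would enjoy", memories, now)
```

With this toy data, the recent historical-fiction question and the dystopian-novel preference come out on top, while the old weather remark and the cooking chat are left behind.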

Reflective Thinking in Large Language Models

Challenge:

Large Language Models (LLMs) like ChatGPT typically operate on immediate data and direct observations. When asked to make decisions or generate insights, they may not always consider the underlying patterns or the bigger picture. For instance, if an LLM is tasked with suggesting a hobby for you based on past conversations, it might default to the most frequently mentioned activity without considering your deeper interests or sentiments.

To transcend this limitation, LLMs can employ a reflective process, which allows them to analyze and synthesize underlying themes from past interactions.

Let's say you've interacted with an LLM over several sessions, discussing various topics such as gardening, literature, and travel. You've mentioned gardening many times, but always in the context of its challenges. Conversely, while you've talked about literature less frequently, your comments express joy and engagement.

In a reflective process, the LLM would 'look back' at these conversations and 'realize' that despite the frequency of gardening topics, the sentiment and depth of engagement were higher when discussing literature. It could then infer that literature, not gardening, aligns more closely with your passions and interests.
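A toy example shows why counting mentions alone can mislead. In the sketch below, the sentiment numbers (from -1 for negative to 1 for positive) are hand-labelled, hypothetical values; a real system would have to estimate them, for instance by asking the LLM.

```python
from collections import defaultdict

# (topic, sentiment) pairs; the sentiment values are made up for illustration.
mentions = [
    ("gardening", -0.6), ("gardening", -0.4), ("gardening", -0.5), ("gardening", -0.3),
    ("literature", 0.9), ("literature", 0.8),
    ("travel", 0.4),
]


def summarize(mentions):
    """Return {topic: (mention_count, average_sentiment)}."""
    by_topic = defaultdict(list)
    for topic, sentiment in mentions:
        by_topic[topic].append(sentiment)
    return {topic: (len(vals), sum(vals) / len(vals)) for topic, vals in by_topic.items()}


summary = summarize(mentions)
most_frequent = max(summary, key=lambda topic: summary[topic][0])  # most talked about
most_enjoyed = max(summary, key=lambda topic: summary[topic][1])   # most positive on average
```

Here the most frequent topic is gardening, but the most enjoyed one is literature, which is exactly the gap that reflection is meant to catch.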

The LLM would periodically generate reflections based on significant events or interactions, identifying key patterns or themes. This is achieved by asking high-level questions and synthesizing insights from the answers. We will learn more about how to implement this in the next article along with the code!

Given your dialogue history, the LLM might generate a reflection such as: "You seem to have a strong affinity for storytelling and narrative experiences, as evidenced by your enthusiastic discussions about literature. This passion for storytelling might also extend to your interest in travel, where you explore not just new places but also their stories."
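The "ask high-level questions, then synthesize insights" loop could be sketched roughly as follows. This is only an outline under assumptions: `llm` is any function that takes a prompt string and returns text, the prompt wording is invented here, and `fake_llm` is a canned stand-in so the example runs without a real model.

```python
from typing import Callable


def reflect(memories: list[str], llm: Callable[[str], str], num_questions: int = 2) -> list[str]:
    """Two-step reflection: generate high-level questions, then answer each one."""
    statements = "\n".join(f"- {m}" for m in memories)

    # Step 1: ask the model which high-level questions the memories can answer.
    questions_prompt = (
        f"Statements:\n{statements}\n"
        f"List {num_questions} high-level questions we can answer about the user, one per line."
    )
    questions = [q.strip() for q in llm(questions_prompt).splitlines() if q.strip()]

    # Step 2: answer each question with one insight grounded in the statements.
    insights = []
    for question in questions[:num_questions]:
        insight_prompt = (
            f"Statements:\n{statements}\n"
            f"Question: {question}\n"
            "Answer with one insight supported by the statements."
        )
        insights.append(llm(insight_prompt).strip())
    return insights


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model call, used only to make the sketch runnable."""
    if "Question:" in prompt:
        return "The user has a strong affinity for storytelling."
    return "What does the user enjoy most?\nWhat connects the user's interests?"


insights = reflect(
    ["Talks about gardening often, mostly its challenges.",
     "Speaks about literature with joy and engagement.",
     "Likes learning the stories behind places visited."],
    fake_llm,
)
```

In a fuller agent, the resulting insights would presumably be written back into the memory stream so that later retrievals can draw on them.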

Planning

Now, can we make ChatGPT into an agent? We have given it a memory as well as the ability to reflect. Can we give it the ability to plan as well? Let's say you make ChatGPT play the role of a software engineer named "John Smith", a diligent man keen on maintaining a productive workflow. He has a penchant for tackling challenging coding problems, enjoys collaborating with his peers, and is proactive about professional development. John is also health-conscious and tries to integrate exercise into his busy schedule.

We aim to provide John with the ability to organize tasks, set goals, and adapt to new information over the course of a workday.

The plan that ChatGPT, as John, sets at the beginning of the day involves:

- Reviewing the agenda for the day, including work tasks and personal activities.

- Allocating blocks of time for focused coding sessions, considering optimal productivity periods.

- Scheduling meetings and collaborative work around existing commitments.

- Incorporating time for learning and professional development, such as reading articles or taking online courses.

- Setting aside time for exercise and relaxation, to maintain a healthy balance.

As new tasks or changes arise, ChatGPT-as-John reacts by reshuffling his plan. For instance, if an urgent bug is reported, John may need to reprioritize his tasks, postponing a learning session to address the immediate issue. This showcases his adaptability and problem-solving skills.

John's day is dynamic. If a critical issue occurs, he can shift his schedule, moving less critical tasks to later in the day or even to the next day. His planning is not just about sticking to a schedule but also about responding to the needs of the moment.
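John's reshuffling can be sketched as a small re-planning function. The task names, durations, priority scale, and the "defer the lowest-priority task until the day fits" rule are all assumptions chosen for illustration; an actual agent would more likely ask the LLM to rewrite the plan in natural language.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    minutes: int   # estimated duration
    priority: int  # hypothetical scale: higher means more critical


def replan(plan: list[Task], urgent: Task, day_minutes: int = 480):
    """Put the urgent task first, then defer low-priority tasks until the day fits."""
    new_plan = [urgent] + list(plan)
    deferred = []
    while sum(task.minutes for task in new_plan) > day_minutes and len(new_plan) > 1:
        # Never defer the urgent task itself (index 0).
        victim = min(new_plan[1:], key=lambda task: task.priority)
        new_plan.remove(victim)
        deferred.append(victim)
    return new_plan, deferred


# An illustrative eight-hour plan for John (all values are made up).
plan = [
    Task("Review agenda", 30, 5),
    Task("Focused coding", 180, 4),
    Task("Team meeting", 60, 4),
    Task("Online course", 60, 1),
    Task("Exercise", 45, 2),
    Task("Code review", 105, 3),
]
new_plan, deferred = replan(plan, Task("Fix urgent bug", 60, 5))
```

With these numbers, the bug fix moves to the front of the day and the online course is the one task pushed to tomorrow, while everything else stays in its original order.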

With these enhancements, ChatGPT can simulate John Smith's day effectively, providing a believable and coherent narrative of a software engineer's life. It can plan, execute, and adapt the plan based on John's professional and personal priorities, just as a real individual would.

Park, J. S., O'Brien, J. C., Cai, C. J., Morris, M. R., Liang, P., & Bernstein, M. S. (2023). Generative Agents: Interactive Simulacra of Human Behavior. arXiv preprint arXiv:2304.03442. https://doi.org/10.48550/arXiv.2304.03442